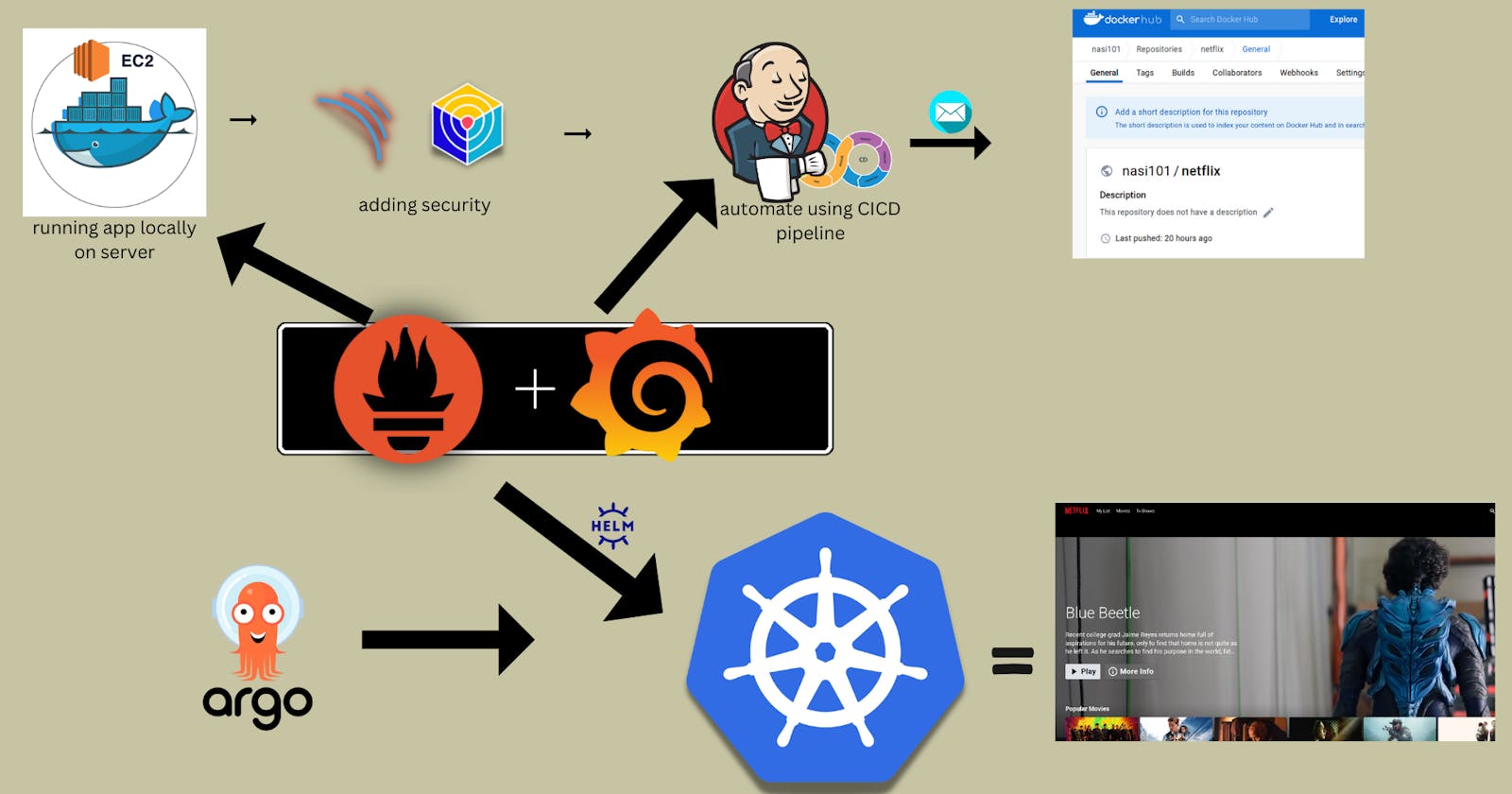

This project aims to deploy a Netflix Clone application using a DevSecOps approach, integrating a variety of tools and practices for secure, automated, and monitored software delivery. The deployment takes place on AWS, utilizing Docker for containerization, Trivy and OWASP for security checks, Jenkins for automation, SonarQube for code analysis, and Grafana with Prometheus for monitoring.

Tools Utilized:

AWS (Amazon Web Services):

- Cloud infrastructure for hosting the Netflix Clone application.

Docker:

- Containerization platform to package and deploy the application consistently across environments.

Trivy:

- Vulnerability scanner for container images and file systems, used to check both the source code and the built image for known vulnerabilities.

OWASP (Open Web Application Security Project):

- Security practices and tools for identifying and mitigating application security risks; this project uses OWASP Dependency-Check to flag dependencies with known vulnerabilities.

Jenkins:

- Automation server used for continuous integration and continuous delivery (CI/CD) pipelines.

SonarQube:

- A platform for continuous inspection of code quality that detects bugs, code smells, and security issues so they can be fixed.

Grafana:

- Open-source analytics and monitoring platform, used for visualizing metrics and logs.

Prometheus:

- Open-source monitoring and alerting toolkit, integrated with Grafana for comprehensive monitoring.

Phase 1: Initial Setup and Deployment

Step 1: Launch EC2 (Ubuntu 22.04)

Provision a t2.large EC2 instance on AWS running Ubuntu 22.04, with 25 GB of storage.

Connect to the instance using SSH.

Step 2: Clone the Code

Refresh the package index on the EC2 instance.

Clone the application's code repository onto the EC2 instance and change into it.

```bash
sudo apt update
git clone https://github.com/Aman-Awasthi/DevSecOps-Project.git
cd DevSecOps-Project
```

Step 3: Install Docker and Run the App Using a Container:

- Set up Docker on the EC2 instance:

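```bash
sudo apt-get update
sudo apt-get install docker.io -y
# Let the current user run docker without sudo
sudo usermod -aG docker $USER
# Apply the new group membership in the current shell
newgrp docker
# Open the Docker socket to every local user (very permissive; this also lets the Jenkins user run docker later)
sudo chmod 777 /var/run/docker.sock
```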

Step 4: Write the Dockerfile

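```dockerfile
# Stage 1: install dependencies and build the static site
FROM node:16.17.0-alpine AS builder
WORKDIR /app
COPY ./package.json .
COPY ./yarn.lock .
RUN yarn install
COPY . .
# The TMDB key is supplied at build time; Vite exposes VITE_-prefixed variables to the app
ARG TMDB_V3_API_KEY
ENV VITE_APP_TMDB_V3_API_KEY=${TMDB_V3_API_KEY}
ENV VITE_APP_API_ENDPOINT_URL="https://api.themoviedb.org/3"
RUN yarn build

# Stage 2: serve the built files with nginx
FROM nginx:stable-alpine
WORKDIR /usr/share/nginx/html
RUN rm -rf ./*
COPY --from=builder /app/dist .
EXPOSE 80
ENTRYPOINT ["nginx", "-g", "daemon off;"]
```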

Step 5: Get the API Key:

Open a web browser and navigate to the TMDB (The Movie Database) website.

Click on "Login" and create an account.

Once logged in, go to your profile and select "Settings."

Click on "API" from the left-side panel.

Create a new API key by clicking "Create" and accepting the terms and conditions.

Provide the required basic details and click "Submit."

You will receive your TMDB API key.

We will use this API key in our project to get the videos from this database.

- Build the image, replacing `<your-api-key>` with the API key you received from TMDB:

```bash
docker build --build-arg TMDB_V3_API_KEY=<your-api-key> -t netflix .
```
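As an optional alternative to typing the key directly into the command (where it ends up in shell history), the same build can be driven from an environment variable. The Python sketch below assumes the key has been exported as `TMDB_V3_API_KEY` beforehand and that it runs from the repository root; `docker_build_cmd` and `build_image` are hypothetical helper names, not part of the project.

```python
import os
import subprocess


def docker_build_cmd(api_key: str, tag: str = "netflix", context: str = ".") -> list[str]:
    """Assemble the `docker build` command shown above as an argument list."""
    if not api_key:
        raise ValueError("TMDB API key is empty")
    return [
        "docker", "build",
        "--build-arg", f"TMDB_V3_API_KEY={api_key}",
        "-t", tag,
        context,
    ]


def build_image(env_var: str = "TMDB_V3_API_KEY") -> None:
    """Read the key from an environment variable (assumed convention) and build."""
    key = os.environ.get(env_var, "")
    # An argument list (no shell) means the key never needs quoting or escaping.
    subprocess.run(docker_build_cmd(key), check=True)
```

With the variable set, `build_image()` runs the same `docker build` as above. Passing an argument list rather than a shell string avoids quoting problems if the key ever contains special characters.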

- Open necessary ports (8081 for the application, 8080 for Jenkins, 9000 for SonarQube) in the EC2 security group.

- Run the container:

```bash
docker run -d -p 8081:80 netflix:latest
```

- The application should now be up and running. Access it at `publicIP:8081`.
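A quick way to confirm that the container answers on port 8081, for example after each redeploy, is a small HTTP check. This is a minimal sketch using only Python's standard library; `app_is_up` is a hypothetical helper, and it treats anything other than an HTTP 200 response (including a refused connection) as "not up".

```python
import urllib.error
import urllib.request

# Connect directly, ignoring any HTTP(S)_PROXY environment variables.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def app_is_up(host: str, port: int = 8081, timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to http://host:port/ answers with status 200."""
    try:
        with _OPENER.open(f"http://{host}:{port}/", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Refused connection, timeout, DNS failure, or a non-2xx status (HTTPError).
        return False
```

`app_is_up("<public-ip>")` returns `True` once nginx inside the container is serving the app. The opener deliberately bypasses proxy settings so the check talks to the instance directly.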

Phase 2: Security

Install SonarQube and Trivy

- Install SonarQube (it runs as a Docker container):

```bash
docker run -d --name sonar -p 9000:9000 sonarqube:lts-community
```

- SonarQube should be accessible at `publicIP:9000` with the initial credentials admin/admin.

- Install Trivy.

```bash
sudo apt-get install wget apt-transport-https gnupg lsb-release
wget -qO - https://aquasecurity.github.io/trivy-repo/deb/public.key | sudo apt-key add -
echo deb https://aquasecurity.github.io/trivy-repo/deb $(lsb_release -sc) main | sudo tee -a /etc/apt/sources.list.d/trivy.list
sudo apt-get update
sudo apt-get install trivy
```

To scan with Trivy, run `trivy fs .` for a file-system scan or `trivy image <image name/id>` for a container image scan.
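The table output of `trivy fs` and `trivy image` is meant for people. For a summary that a script can act on, Trivy can also write JSON with `--format json`. The sketch below counts findings per severity; it assumes the JSON layout of current Trivy releases (a top-level `Results` list whose entries may include a `Vulnerabilities` list with a `Severity` field), and `count_by_severity` and `scan_image` are illustrative names.

```python
import json
import subprocess
from collections import Counter


def count_by_severity(report: dict) -> Counter:
    """Count vulnerabilities per severity in a parsed Trivy JSON report."""
    counts: Counter = Counter()
    for result in report.get("Results") or []:
        # Targets without findings may omit "Vulnerabilities" or set it to null.
        for vuln in result.get("Vulnerabilities") or []:
            counts[vuln.get("Severity", "UNKNOWN")] += 1
    return counts


def scan_image(image: str) -> Counter:
    """Run `trivy image --format json <image>` and summarize the findings."""
    out = subprocess.run(
        ["trivy", "image", "--format", "json", image],
        check=True, capture_output=True, text=True,
    ).stdout
    return count_by_severity(json.loads(out))
```

`scan_image("netflix:latest")` returns a `Counter` keyed by severity (`CRITICAL`, `HIGH`, and so on). If the goal is only to fail a build on serious findings, Trivy can do that itself with `--exit-code 1 --severity CRITICAL`.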

We will integrate SonarQube when configuring Jenkins.

Phase 3: CI/CD Setup

Install Jenkins for Automation

- Install Java and Jenkins.

```bash
# Java
sudo apt update
sudo apt install fontconfig openjdk-17-jre
java -version

# Jenkins
sudo wget -O /usr/share/keyrings/jenkins-keyring.asc \
  https://pkg.jenkins.io/debian/jenkins.io-2023.key
echo deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] \
  https://pkg.jenkins.io/debian binary/ | sudo tee \
  /etc/apt/sources.list.d/jenkins.list > /dev/null
sudo apt-get update
sudo apt-get install jenkins
sudo systemctl start jenkins
sudo systemctl enable jenkins
```

- Access Jenkins in a web browser at `publicIP:8080`.

- Unlock Jenkins with the initial admin password from the location shown on the unlock page (by default `/var/lib/jenkins/secrets/initialAdminPassword`, which you can print with `sudo cat /var/lib/jenkins/secrets/initialAdminPassword`).

- Install the suggested plugins.

- Create the first admin user and keep its password safe.

- Jenkins is now up and running, and you are logged in.

Install the necessary plugins in Jenkins ("Manage Jenkins" -> "Manage Plugins" -> "Available"):

- Eclipse Temurin Installer
- SonarQube Scanner
- NodeJS Plugin

Configure Java and Node.js in Global Tool Configuration

Navigate to Global Tool Configuration:

Open Jenkins and go to "Manage Jenkins" on the left-hand side.

Click on "Global Tool Configuration."

Scroll down to the "JDK" section, click on "Add JDK," and select "Install automatically."

Select "17" for the version, name it "jdk17," and click "Apply" and "Save."

Similarly, scroll down to the "NodeJS" section, click "Add NodeJS," and select "Install automatically."

Select "16.2.0" for the version, name it "node16," and click "Apply" and "Save." The names `jdk17` and `node16` are referenced by the pipeline's `tools` block, so they must match exactly.

SonarQube Jenkins Configuration

Generate SonarQube Token:

Log in to SonarQube.

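Go to "Administration" -> "Security" -> "Users."

Open the token menu ("Update Tokens") for your user, click "Generate Token," and copy the token.

- Go to Projects -> Create Manually -> Locally -> Use existing token, and enter the token generated in the previous step. Use `Netflix` as the project key so that it matches the `-Dsonar.projectKey` value in the pipeline.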

Configure SonarQube Credentials in Jenkins:

In Jenkins, go to "Manage Jenkins" -> "Credentials" -> "System" -> "Global credentials."

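Add new credentials with "Kind" set to "Secret Text," paste the SonarQube token as the secret, and set the ID to `Sonar-token` (the `credentialsId` used by the pipeline's quality gate stage).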

Configure SonarQube Server in Jenkins:

Go to "Manage Jenkins" -> "System" -> "SonarQube servers."

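Add a SonarQube server named "sonar-server" (the name passed to `withSonarQubeEnv` in the pipeline), provide the server URL including the port (for example, `http://<public-ip>:9000`), and select the SonarQube token credential created above as the authentication token.

- In "Global Tool Configuration," add a SonarQube Scanner installation named "sonar-scanner" (the name the pipeline passes to `tool`) and select "Install automatically."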

Jenkins Plugin Installation

Install Additional Jenkins Plugins:

Go to "Manage Jenkins" -> "Manage Plugins" -> "Available."

Install the following plugins:

- OWASP Dependency-Check
- Docker
- Docker Commons
- Docker Pipeline
- Docker API
- Docker Build Step

DockerHub Credentials Setup

Navigate to Jenkins Credentials:

- Go to "Manage Jenkins" -> "Manage Credentials."

Add DockerHub Credentials:

- Click on "Global credentials (unrestricted)" and then "Add Credentials."

Select Credential Kind:

- Choose "Username with password" in the "Kind" dropdown.

Provide DockerHub Details:

- Enter the DockerHub username and password, and set the ID to `dockerhub`, which is the `credentialsId` used by the pipeline's Docker stage.

Save Credentials:

- Click "OK" to save DockerHub credentials.

Configure Dependency-Check Tool:

After installing the Dependency-Check plugin, you need to configure the tool.

Go to "Dashboard" → "Manage Jenkins" → "Global Tool Configuration."

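Find the section for "OWASP Dependency-Check."

Add an installation named "DP-Check" (the name the pipeline passes as `odcInstallation`) and select "Install automatically."

Save your settings.

Similarly, in the "Docker installations" section, add a tool named "docker" (the `toolName` used by the pipeline's Docker stage), select "Install automatically," and choose "Download from docker.com."

Apply and save your settings.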

Jenkins Pipeline Configuration

Create Jenkins Pipeline:

- Create a new Pipeline job in Jenkins (this guide names it `Netflix-Clone-Pipeline`) and use the following script:

```groovy
pipeline {
    agent any
    tools {
        // Names defined in Global Tool Configuration
        jdk 'jdk17'
        nodejs 'node16'
    }
    environment {
        SCANNER_HOME = tool 'sonar-scanner'
    }
    stages {
        stage('clean workspace') {
            steps {
                cleanWs()
            }
        }
        stage('Checkout from Git') {
            steps {
                git branch: 'main', url: 'https://github.com/N4si/DevSecOps-Project.git'
            }
        }
        stage("Sonarqube Analysis") {
            steps {
                withSonarQubeEnv('sonar-server') {
                    sh '''$SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Netflix \
                        -Dsonar.projectKey=Netflix'''
                }
            }
        }
        stage("quality gate") {
            steps {
                script {
                    waitForQualityGate abortPipeline: false, credentialsId: 'Sonar-token'
                }
            }
        }
        stage('Install Dependencies') {
            steps {
                sh "npm install"
            }
        }
        stage('OWASP FS SCAN') {
            steps {
                dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'
                dependencyCheckPublisher pattern: '**/dependency-check-report.xml'
            }
        }
        stage('TRIVY FS SCAN') {
            steps {
                sh "trivy fs . > trivyfs.txt"
            }
        }
        stage("Docker Build & Push") {
            steps {
                script {
                    withDockerRegistry(credentialsId: 'dockerhub', toolName: 'docker') {
                        // Replace <your-api-key> with your TMDB API key; never commit a real key.
                        // Replace amana6420 with your own Docker Hub username.
                        sh "docker build --build-arg TMDB_V3_API_KEY=<your-api-key> -t netflix ."
                        sh "docker tag netflix amana6420/netflix:latest"
                        sh "docker push amana6420/netflix:latest"
                    }
                }
            }
        }
        stage("TRIVY") {
            steps {
                sh "trivy image amana6420/netflix:latest > trivyimage.txt"
            }
        }
        stage('Deploy to container') {
            steps {
                // Port 8081 must be free: stop the container started in Phase 1 first.
                sh 'docker run -d -p 8081:80 amana6420/netflix:latest'
            }
        }
    }
}
```

- This script includes stages like clean workspace, checkout from Git, SonarQube analysis, quality gate, install dependencies, OWASP FS scan, TRIVY FS scan, Docker build & push, TRIVY, and deploy to the container.

Save and Run the Pipeline:

- Save the pipeline script and run the pipeline. Ensure that SonarQube analysis, dependency checks, and Docker build are successful.

Verify Results:

Check SonarQube for analysis results and vulnerabilities.

Verify that the Dependency-Check report is published in Jenkins and that the Docker image is pushed to Docker Hub.

The application should be up and running on your EC2 instance.
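Because the quality gate stage uses `abortPipeline: false`, a failed gate does not stop the build, so it can be worth checking the gate status directly. SonarQube reports it through its Web API endpoint `api/qualitygates/project_status`, whose response carries a `projectStatus.status` of `OK` when the gate passes. The sketch below assumes token authentication via HTTP basic auth (the token as the username, empty password) and uses the `Netflix` project key from the pipeline; the server URL, the token, and the helper names are placeholders.

```python
import base64
import json
import urllib.parse
import urllib.request


def gate_passed(payload: dict) -> bool:
    """True if the project's quality gate status is 'OK'."""
    return payload.get("projectStatus", {}).get("status") == "OK"


def fetch_gate_status(sonar_url: str, token: str, project_key: str = "Netflix") -> dict:
    """Call api/qualitygates/project_status for a single project key."""
    query = urllib.parse.urlencode({"projectKey": project_key})
    # Token as the basic-auth username, with an empty password.
    auth = base64.b64encode(f"{token}:".encode()).decode()
    req = urllib.request.Request(
        f"{sonar_url}/api/qualitygates/project_status?{query}",
        headers={"Authorization": f"Basic {auth}"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

`gate_passed(fetch_gate_status("http://<public-ip>:9000", token))` returns `True` only when the gate status is `OK`.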


On the EC2 machine, the Trivy and Dependency-Check report files are written to the pipeline's workspace: `/var/lib/jenkins/workspace/Netflix-Clone-Pipeline`


This completes the configuration and execution of the CI/CD pipeline for the Netflix Clone application. Our application is up and running fine.

Phase 4: Adding Monitoring with Prometheus and Grafana

Launch EC2 Instance

- Launch a t2.medium EC2 instance with 20 GB storage.

Install Prometheus and Grafana:

- Set up Prometheus and Grafana to monitor your application.

Installing Prometheus:

- First, create a dedicated Linux user for Prometheus and download Prometheus:

```bash
sudo useradd --system --no-create-home --shell /bin/false prometheus
wget https://github.com/prometheus/prometheus/releases/download/v2.47.1/prometheus-2.47.1.linux-amd64.tar.gz
```

- Extract Prometheus files, move them, and create directories:

```bash
tar -xvf prometheus-2.47.1.linux-amd64.tar.gz
cd prometheus-2.47.1.linux-amd64/
sudo mkdir -p /data /etc/prometheus
sudo mv prometheus promtool /usr/local/bin/
sudo mv consoles/ console_libraries/ /etc/prometheus/
sudo mv prometheus.yml /etc/prometheus/prometheus.yml
```

- Set ownership for directories:

```bash
sudo chown -R prometheus:prometheus /etc/prometheus/ /data/
```

- Create a systemd unit configuration file for Prometheus:

```bash
sudo vi /etc/systemd/system/prometheus.service
```

- Add the following content to the `prometheus.service` file:

```ini
[Unit]
Description=Prometheus
Wants=network-online.target
After=network-online.target
StartLimitIntervalSec=500
StartLimitBurst=5

[Service]
User=prometheus
Group=prometheus
Type=simple
Restart=on-failure
RestartSec=5s
ExecStart=/usr/local/bin/prometheus \
  --config.file=/etc/prometheus/prometheus.yml \
  --storage.tsdb.path=/data \
  --web.console.templates=/etc/prometheus/consoles \
  --web.console.libraries=/etc/prometheus/console_libraries \
  --web.listen-address=0.0.0.0:9090 \
  --web.enable-lifecycle

[Install]
WantedBy=multi-user.target
```

Here's a brief explanation of the key parts in this prometheus.service file:

- `User` and `Group` specify the Linux user and group under which Prometheus runs.
- `ExecStart` specifies the Prometheus binary path, the location of the configuration file (`prometheus.yml`), the storage directory, and other settings.
- `--web.listen-address` configures Prometheus to listen on all network interfaces on port 9090.
- `--web.enable-lifecycle` allows Prometheus to be managed through HTTP API calls, such as reloading its configuration.

Enable and start Prometheus:

```bash
sudo systemctl enable prometheus
sudo systemctl start prometheus
```

- Verify Prometheus's status:

```bash
sudo systemctl status prometheus
```

Prometheus is now reachable in a web browser at your server's IP on port 9090:

`http://<your-server-ip>:9090`

Installing Node Exporter:

- Create a system user for Node Exporter and download Node Exporter:

```bash
sudo useradd --system --no-create-home --shell /bin/false node_exporter
wget https://github.com/prometheus/node_exporter/releases/download/v1.6.1/node_exporter-1.6.1.linux-amd64.tar.gz
```

- Extract Node Exporter files, move the binary, and clean up:

```bash
tar -xvf node_exporter-1.6.1.linux-amd64.tar.gz
sudo mv node_exporter-1.6.1.linux-amd64/node_exporter /usr/local/bin/
rm -rf node_exporter*
```

- Create a systemd unit configuration file for Node Exporter:

```bash
sudo nano /etc/systemd/system/node_exporter.service
```

- Add the following content to the `node_exporter.service` file:

```ini
[Unit]
Description=Node Exporter
Wants=network-online.target
After=network-online.target
StartLimitIntervalSec=500
StartLimitBurst=5

[Service]
User=node_exporter
Group=node_exporter
Type=simple
Restart=on-failure
RestartSec=5s
ExecStart=/usr/local/bin/node_exporter --collector.logind

[Install]
WantedBy=multi-user.target
```

Replace or extend `--collector.logind` with other collector flags as needed.

- Enable and start Node Exporter:

```bash
sudo systemctl enable node_exporter
sudo systemctl start node_exporter
```

- Verify the Node Exporter's status:

```bash
sudo systemctl status node_exporter
```

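- Node Exporter now serves metrics on port 9100 (`http://<your-server-ip>:9100/metrics`); Prometheus picks them up once it is configured to scrape that port.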
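Before wiring Node Exporter into Prometheus, you can spot-check its output. The endpoint returns plain text in the Prometheus exposition format: one `name{labels} value` sample per line, plus `#` comment lines. The parser below is a deliberately simplified sketch that assumes no trailing timestamps and is not a full implementation of the format; `parse_metrics` and `fetch_metrics` are illustrative names.

```python
import urllib.request


def parse_metrics(text: str) -> dict[str, float]:
    """Map 'name' or 'name{labels}' to its value, skipping comment lines.

    Simplified: assumes each sample line is '<series> <value>' with no timestamp.
    """
    samples: dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        if not series:
            continue  # no separator: not a sample line
        try:
            samples[series] = float(value)  # float() also accepts NaN and +Inf
        except ValueError:
            continue  # a line this sketch does not understand
    return samples


def fetch_metrics(host: str, port: int = 9100) -> dict[str, float]:
    """Fetch and parse http://host:port/metrics (Node Exporter's default endpoint)."""
    with urllib.request.urlopen(f"http://{host}:{port}/metrics", timeout=5) as resp:
        return parse_metrics(resp.read().decode("utf-8"))
```

`fetch_metrics("localhost")["node_load1"]` returns the one-minute load average, one of the standard Node Exporter metrics.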

Configure Prometheus Plugin Integration:

- Integrate Jenkins with Prometheus to monitor the CI/CD pipeline.

Prometheus Configuration:

- To configure Prometheus to scrape metrics from Node Exporter and Jenkins, modify the `prometheus.yml` file in `/etc/prometheus`. Here is an example `prometheus.yml` configuration for this setup; the `prometheus` job, which scrapes Prometheus itself, is kept from the default file:

```yaml
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']

  - job_name: 'node_exporter'
    static_configs:
      - targets: ['localhost:9100']

  - job_name: 'jenkins'
    metrics_path: '/prometheus'
    static_configs:
      - targets: ['<your-jenkins-ip>:<your-jenkins-port>']
```

- Replace `<your-jenkins-ip>` and `<your-jenkins-port>` with the values for your Jenkins server (in this setup, the public IP of the Jenkins EC2 instance and port 8080).

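- Check the validity of the configuration file:

```bash
promtool check config /etc/prometheus/prometheus.yml
```

- Reload the Prometheus configuration without restarting (this works because the service starts Prometheus with `--web.enable-lifecycle`):

```bash
curl -X POST http://localhost:9090/-/reload
```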

- You can view the Prometheus targets at:

`http://<your-prometheus-ip>:9090/targets`

- At this point, the node_exporter and prometheus targets show as UP, but the jenkins target shows as DOWN because Jenkins does not expose metrics yet; that is configured on the Jenkins side next.

Prometheus Plugin Integration in Jenkins:

Install the "Prometheus Metrics" plugin in Jenkins.

Restart Jenkins.

Go to Manage Jenkins > System > Prometheus.

Set the path as "prometheus" and save changes.

- Jenkins now shows as UP on the Prometheus targets page.
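The Targets page is backed by Prometheus's HTTP API, so the same UP/DOWN check can be scripted, for instance from cron or a pipeline step. This sketch reads `/api/v1/targets` and groups each active target's `health` value (`up`, `down`, or `unknown`) by its `job` label. The Prometheus address is an assumption to adjust, and the helper names are hypothetical.

```python
import json
import urllib.request


def target_health(payload: dict) -> dict[str, list[str]]:
    """Group the 'health' of each active target by its 'job' label."""
    health: dict[str, list[str]] = {}
    for target in payload.get("data", {}).get("activeTargets", []):
        job = target.get("labels", {}).get("job", "?")
        health.setdefault(job, []).append(target.get("health", "unknown"))
    return health


def fetch_target_health(base_url: str = "http://localhost:9090") -> dict[str, list[str]]:
    """Query Prometheus's /api/v1/targets endpoint and summarize it."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets", timeout=5) as resp:
        return target_health(json.load(resp))
```

`fetch_target_health()` should list one entry per job in `prometheus.yml`; any `down` entry points at a target worth investigating.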

Install Grafana on Ubuntu 22.04 and Set it up to work with Prometheus

Step 1: Install Dependencies:

First, ensure that all necessary dependencies are installed:

```bash
sudo apt-get update
sudo apt-get install -y apt-transport-https software-properties-common
```

Step 2: Add the GPG Key:

Add the GPG key for Grafana:

```bash
wget -q -O - https://packages.grafana.com/gpg.key | sudo apt-key add -
```

Step 3: Add Grafana Repository:

Add the repository for Grafana stable releases:

```bash
echo "deb https://packages.grafana.com/oss/deb stable main" | sudo tee -a /etc/apt/sources.list.d/grafana.list
```

Step 4: Update and Install Grafana:

Update the package list and install Grafana:

```bash
sudo apt-get update
sudo apt-get -y install grafana
```

Step 5: Enable and Start Grafana Service:

To automatically start Grafana after a reboot, enable the service:

```bash
sudo systemctl enable grafana-server
```

Then, start Grafana:

```bash
sudo systemctl start grafana-server
```

Step 6: Check Grafana Status:

Verify the status of the Grafana service to ensure it's running correctly:

```bash
sudo systemctl status grafana-server
```

Step 7: Access Grafana Web Interface:

Open a web browser and navigate to Grafana using your server's IP address. The default port for Grafana is 3000. For example:

`http://<your-server-ip>:3000`

You'll be prompted to log in to Grafana. The default username is "admin," and the default password is also "admin."

Step 8: Add Prometheus Data Source:

To visualize metrics, you need to add a data source. Follow these steps:

Click on the gear icon (⚙️) in the left sidebar to open the "Configuration" menu.

Select "Data Sources."

Click on the "Add data source" button.

Choose "Prometheus" as the data source type.

In the "HTTP" section:

Set the "URL" to `http://localhost:9090` (assuming Prometheus is running on the same server).

Click the "Save & Test" button to ensure the data source is working.
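If the monitoring instance is rebuilt often, Step 8 can also be scripted through Grafana's HTTP API (`POST /api/datasources`). The sketch below assumes Grafana and Prometheus run on the same host and that the default `admin`/`admin` login from Step 7 is still in place (change it in practice); the data source name `Prometheus` and the helper names are illustrative choices.

```python
import base64
import json
import urllib.request


def datasource_payload(prom_url: str = "http://localhost:9090") -> dict:
    """Request body for a Prometheus data source queried from Grafana's server."""
    return {
        "name": "Prometheus",  # illustrative display name
        "type": "prometheus",
        "url": prom_url,
        "access": "proxy",     # Grafana's backend, not the browser, talks to Prometheus
        "isDefault": True,
    }


def add_datasource(grafana_url: str, user: str, password: str, prom_url: str) -> dict:
    """POST the payload to Grafana's /api/datasources endpoint using basic auth."""
    auth = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        f"{grafana_url}/api/datasources",
        data=json.dumps(datasource_payload(prom_url)).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {auth}"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

`add_datasource("http://localhost:3000", "admin", "admin", "http://localhost:9090")` creates the same data source as the UI steps. With `access` set to `proxy`, Grafana's server sends the queries, which is why `localhost:9090` works even when you browse Grafana from another machine.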

Step 9: Import a Dashboard:

To make it easier to view metrics, you can import a pre-configured dashboard. Follow these steps:

Click on the "+" (plus) icon in the left sidebar to open the "Create" menu.

Select "Dashboard."

Click on the "Import" dashboard option.

Enter the ID of the dashboard you want to import (e.g., 1860, the "Node Exporter Full" dashboard).

Click the "Load" button.

Select the data source you added (Prometheus) from the dropdown.

Click on the "Import" button.

- Dashboard IDs can be found in the dashboard catalog at grafana.com/grafana/dashboards.

- The imported dashboard now visualizes the Node Exporter metrics.

Similarly, we can do it for Jenkins and import the dashboard.

Phase 5: Jenkins Email Notification Configuration

Generate Gmail App Password:

Enable 2-step verification for Gmail.

Generate an app password for Jenkins to use in the SMTP configuration.

Navigate to "Manage Jenkins" > "System," scroll to the "E-mail Notification" section, and configure the following settings:

- SMTP server: `smtp.gmail.com`.
- Use SMTP authentication: enable this option.
- Email ID and password: your Gmail address and the app password generated for Jenkins.
- Use SSL: enable SSL for secure communication.
- SMTP port: `465`.
- Test email: verify the configuration by sending a test email to another address.

- We got a successful test email.
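If the Jenkins test ever fails, it helps to check the Gmail settings on their own, separately from Jenkins. The sketch below uses Python's standard `smtplib` with the same settings as above (SSL on port 465, app password); the addresses and password are placeholders, and the helper names are hypothetical.

```python
import smtplib
import ssl
from email.message import EmailMessage


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """A minimal plain-text test email."""
    msg = EmailMessage()
    msg["Subject"] = "Jenkins SMTP test"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("If you can read this, smtp.gmail.com:465 with SSL works.")
    return msg


def send_test_email(sender: str, app_password: str, recipient: str) -> None:
    """Send over implicit TLS (SMTP_SSL) on port 465, matching the Jenkins settings."""
    context = ssl.create_default_context()
    with smtplib.SMTP_SSL("smtp.gmail.com", 465, context=context) as server:
        server.login(sender, app_password)  # the app password, not the account password
        server.send_message(build_test_message(sender, recipient))
```

`send_test_email("you@gmail.com", "<app-password>", "someone@example.com")` should deliver the message if the app password is valid; an `smtplib.SMTPAuthenticationError` usually points at the app password or the 2-step verification setup.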

Next, configure the "Extended E-mail Notification" section:

- SMTP server and port: use the same server (`smtp.gmail.com`) and port (`465`).
- Credentials: select a "Username with password" credential that holds your Gmail address and app password, and enable SSL.
- Save changes: save the updated configuration.

Add a `post` section to the Jenkins pipeline to email the job result. It goes inside the `pipeline { }` block, after `stages { }`:

```groovy
post {
    always {
        emailext attachLog: true,
            subject: "'${currentBuild.result}'",
            body: "Project: ${env.JOB_NAME}<br/>" +
                "Build Number: ${env.BUILD_NUMBER}<br/>" +
                "URL: ${env.BUILD_URL}<br/>",
            to: 'amana6420@gmail.com',  // replace with your own address
            attachmentsPattern: 'trivyfs.txt,trivyimage.txt'  // Trivy reports written by the pipeline
    }
}
```

- Our pipeline is running fine and completed the post-stage as well.

- Check your inbox for the email containing the job result, the build log, and the Trivy reports.